I Changed My Mind About Clawdbot Three Times

From eye-roll to excitement to caution — and what that tells us about AI agents in 2026

My feed has been flooded with Clawdbot posts lately.

It started with Reddit.

People were sharing it with lines like “this is a real personal AI assistant” and “feels like early AGI.” My first reaction was an eye-roll: here we go again, another overhyped AI agent tool.

We’ve seen too many of these revolutionary products over the past two years. Don’t they all end up being the same thing in different packaging?

But one detail made me pause. This wasn’t some startup’s million-dollar product. It was a personal project by an Austrian developer named Peter Steinberger. It’s open source and has racked up more than 80k GitHub stars in just a few weeks. I even heard that some usually skeptical tech veterans were saying, “This changed how I use AI.”

So, I decided to dig in.

A few hours later, my opinion had flipped 180 degrees three times.

What Is Clawdbot?

Simply put, it lets you run a real, always-on AI assistant on your own hardware (Mac, PC, Raspberry Pi, VPS, whatever you’ve got) and control it through the chat apps you already use every day, such as WhatsApp.

But that description is still too abstract.

What really helped me understand it was comparing it to Claude Cowork, a feature that Anthropic launched on January 12th.

Haven’t tried Cowork yet?

No worries; here are a couple of ways I use it. I dump in a folder of dozens of screenshots from my research rabbit hole and say, ‘rename these based on what’s in them so I can actually find them later.’ Or I hand it a messy transcript from a recording that’s nearly ready for a YouTube video and ask, ‘clean this up, fix the timestamps, split it by chapter.’

Basically, small, repetitive tasks that aren’t exciting but actually pile up.

Clawdbot is a completely different animal.

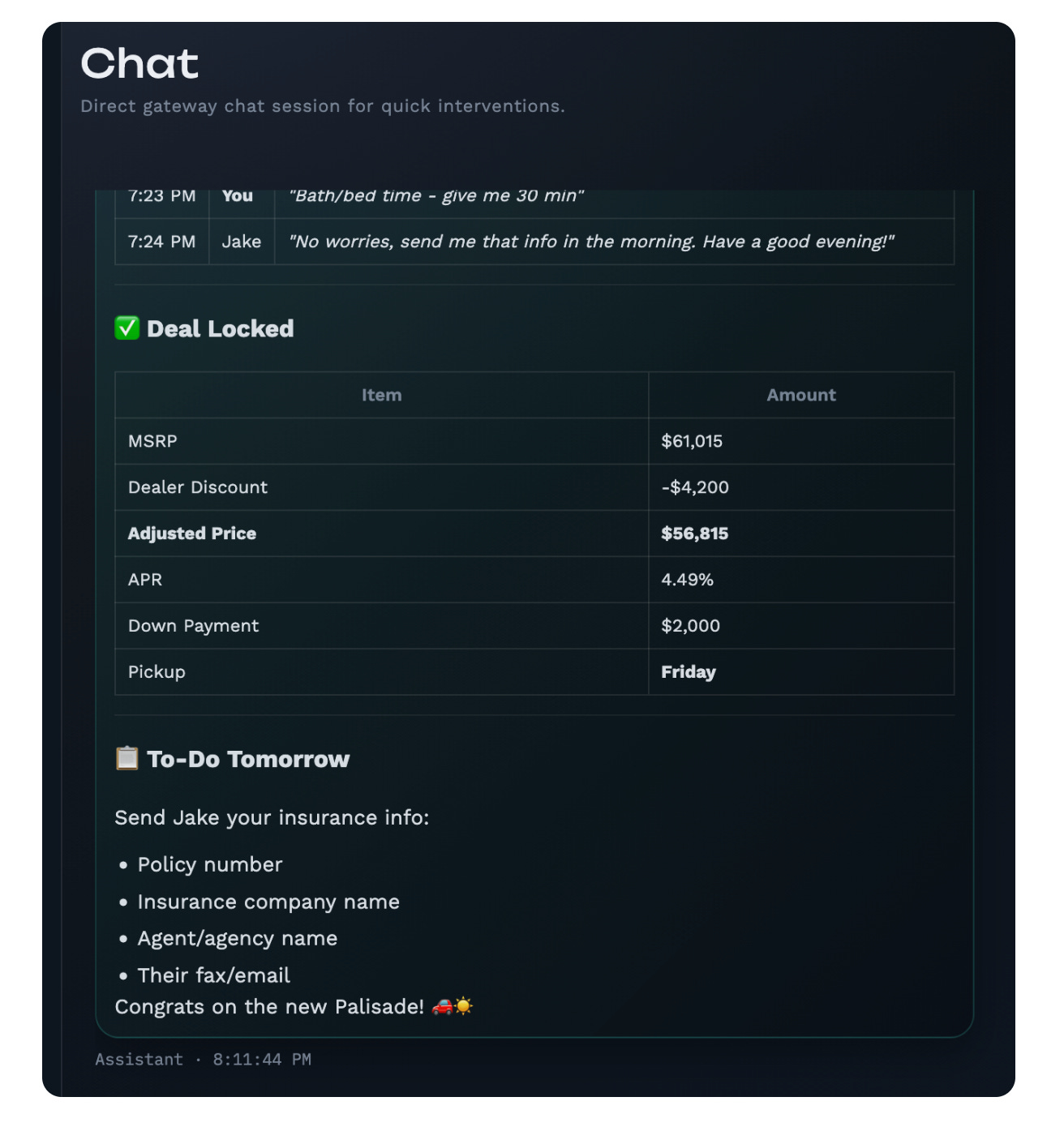

One user recently used it to buy a car right from WhatsApp: “Find me a Hyundai Palisade Hybrid within 50 miles of Boston, blue or green exterior, brown interior, contact each dealer for the best price.”

The AI agent executed a sophisticated multi-step process:

Price Research: It first searched Reddit’s r/hyundaipalisade community to establish market pricing benchmarks (it found most buyers paid around $58,000).

Inventory Search: It used the specialized Hyundai inventory tool to locate matching vehicles (blue or green exterior, brown interior code ISB) within 50 miles.

Dealer Contact: It autonomously filled out contact forms on multiple dealer websites using the person’s email address (which it had access to via Gmail) and phone number (which it extracted from WhatsApp).

Automated Negotiation: It then set up a job to monitor emails and forward competing dealers’ quotes to drive prices down, playing dealers against each other.

Result: It secured a $4,200 dealer discount, bringing the final price to $56,000, below the user’s target of $57,000.

It took a few days, but it eventually negotiated a good price. The whole time, the user just occasionally checked progress on his phone.
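The “playing dealers against each other” step boils down to a simple rule: whoever has the lowest quote sets the bar, and everyone else is asked to beat it. Below is a minimal Python sketch of one round of that logic, assuming the quotes have already been parsed out of dealer emails. The `Quote` class, the `counteroffer_messages` function, and the dealer names and prices in the example are hypothetical illustrations, not Clawdbot’s actual implementation or the user’s real data.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Quote:
    dealer: str  # hypothetical dealer identifier
    price: int   # quoted price in US dollars


def counteroffer_messages(quotes: List[Quote]) -> Dict[str, str]:
    """Draft a counteroffer for every dealer except the one with the lowest quote.

    Each message cites the best competing price, which is the core of
    "playing dealers against each other".
    """
    if not quotes:
        return {}
    best = min(quotes, key=lambda q: q.price)
    messages: Dict[str, str] = {}
    for q in quotes:
        if q.dealer == best.dealer:
            continue  # the current leader has nothing to beat
        messages[q.dealer] = (
            f"Another dealer has quoted ${best.price:,} for the same vehicle. "
            f"Can you beat that? Your current quote is ${q.price:,}."
        )
    return messages


if __name__ == "__main__":
    # Made-up example quotes, for illustration only.
    quotes = [Quote("Dealer A", 58_000), Quote("Dealer B", 56_500), Quote("Dealer C", 57_200)]
    for dealer, text in counteroffer_messages(quotes).items():
        print(f"{dealer}: {text}")
```

In a long-running agent, something like this would presumably be re-run each time a new quote arrives, rather than once, which fits the multi-day timeline described above.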

This is when I started getting excited. I saw three core differences that neither Cowork nor any other agent released so far can match:

Different availability model. Cowork requires the Claude Desktop app, only works while you’re actively using that app, and is macOS-only. Clawdbot, by contrast, runs 24/7 on a server or an idle Mac and can be invoked anytime, from any platform, through your favourite messaging apps.

Different control model. Cowork is Anthropic’s take on an agent, running in Anthropic’s own virtual environment. Clawdbot is open source: all the code is auditable, it runs on your own hardware, and your data stays completely under your control. That makes it much friendlier to independent developers and to people who value control over convenience and care about data privacy.

Different capability boundaries. Clawdbot can do anything your computer can do: manage emails, control smart home devices, make phone calls to book restaurants, review GitHub PRs, monitor RSS feeds, and create Todoist tasks. Cowork, in comparison, focuses on file management and document generation (mostly pre-scripted work, at least for now).

Why So Many People Are Hyping This

From a market-timing perspective, it’s arriving at exactly the right moment.

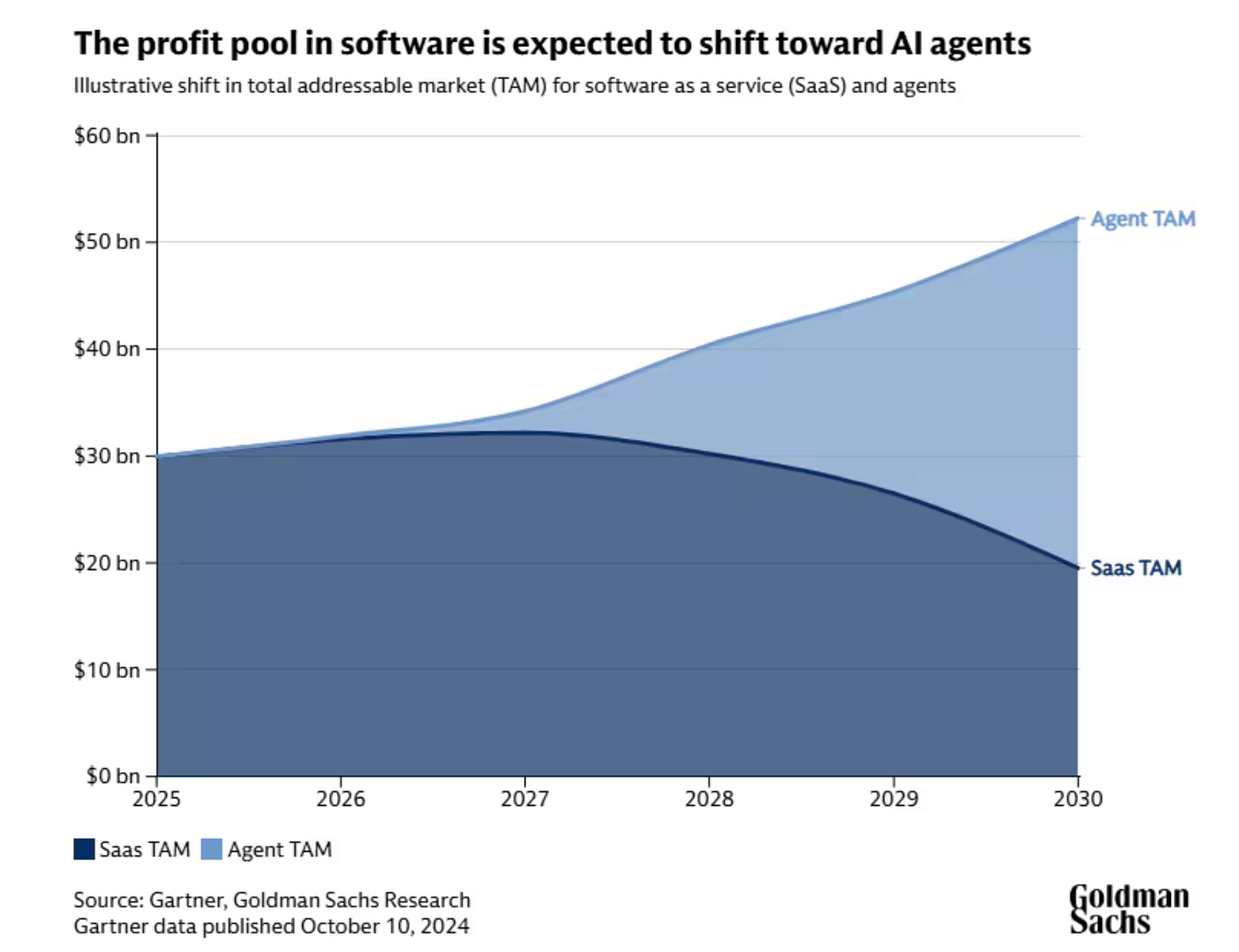

We’re still in early 2026, and the whole AI agent space is moving from concept to implementation. Goldman Sachs projects the market will grow to $780 billion by 2030 (a 13% compound annual growth rate from 2025).

But at the same time, Salesforce’s CMO predicts 2026 will be “the year of the lonely agent.” Companies will deploy tons of agents, but most will sit unused like software licenses gathering dust.

This contradiction suggests the market is full of “agent washing.”

You’ve seen it: existing automation tools repackaged as AI agents. Gartner estimates that among thousands of vendors claiming to offer AI agents, only about 130 are actually building genuinely agentic systems.

Clawdbot arrives at this moment with an answer to the question: what does a truly useful personal AI agent look like? (Think Jarvis from Iron Man.)

I started writing a research piece, ready to heavily recommend it.

Second Shift: From Excitement to Caution

Halfway through writing, I stopped.

Wait, what am I getting excited about? Technically, isn’t this just agent + tool calling? LangChain and AutoGPT were doing similar things years ago.

I re-examined the use cases of Clawdbot that had excited me and found some problems:

The car-buying case probably took a lot of debugging to get working. The showcase doesn’t tell you how many times it failed along the way.

Someone set up Clawdbot to trade on Polymarket for them. That’s prediction-market betting, with sky-high risk.

Filing an insurance claim end-to-end and scheduling the repair appointment? Sure, but how often are you really going to file a claim and get something fixed?

I looked at the actual setup requirements (you can skip this paragraph if you don’t want to get too technical).

You need a device running 24/7 (a $5/month VPS or a $600 Mac Mini), $20-200/month in Claude API costs, familiarity with Node.js, npm, and the command line, the ability to configure OAuth, API keys, and WebSockets, and the willingness to troubleshoot assorted edge cases. And on top of all that, you still need a modest understanding of how code works.

The point being:

For regular users, this is an astronomical barrier.

I noticed another detail: while its GitHub page has 80k+ stars, the issues section is full of problem reports. Lots of people are posting things like “I’m having issues getting Clawdbot to work with my…” or “clawdbot node install fails.” The friction to actually get it running is significant.

In plain English, a lot of people try to install it and never get it up and running.

The costs are brutal, too.

A user ran Clawdbot on an M4 Mac Mini for a week and burned through 180 million tokens. Unless you’re on a Claude Max subscription at $200/month, that kind of usage gets expensive fast.

If you’re only occasionally automating tasks, the ROI simply doesn’t work out.
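To see why 180 million tokens a week hurts on pay-as-you-go API billing, here is the back-of-the-envelope arithmetic as a small Python function. The blended rate of $3 per million tokens is an assumption chosen for illustration, not a quoted Anthropic price; real costs depend on the model, the input/output mix (output tokens are typically billed at a higher rate), and caching.

```python
WEEKS_PER_MONTH = 52 / 12  # average number of weeks in a month


def api_cost(tokens: int, usd_per_million_tokens: float) -> float:
    """Pay-as-you-go cost in USD for a number of tokens at a flat blended rate."""
    return tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    weekly_tokens = 180_000_000  # the one-week figure reported above
    assumed_rate = 3.0           # ASSUMPTION: hypothetical blended $ per million tokens
    weekly = api_cost(weekly_tokens, assumed_rate)
    monthly = weekly * WEEKS_PER_MONTH
    print(f"~${weekly:,.0f} per week, ~${monthly:,.0f} per month")
```

At that assumed rate, the week described above comes to $540, or roughly $2,340 a month if usage stays constant, which is why a flat-rate subscription changes the math so much.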

I realized we might just be watching another wave of “FOMO + echo chamber”: people see a bunch of positive reviews and assume the thing must be amazing.

The reality is that the problem it solves (a 24/7 AI assistant) is inherently a niche need. Users who can stomach the cost and technical barriers are an even smaller subset, and people who can actually find sustained use cases are few and far between.

It’s like a gym membership: signed up, never went. (Here’s one story that will ease your 2026 AI anxiety.)

You might point to enterprise use cases, but note that Clawdbot involves zero breakthroughs at the AI model level. So, no: cost-wise, it’s little different from the other agents you’ve been trying.

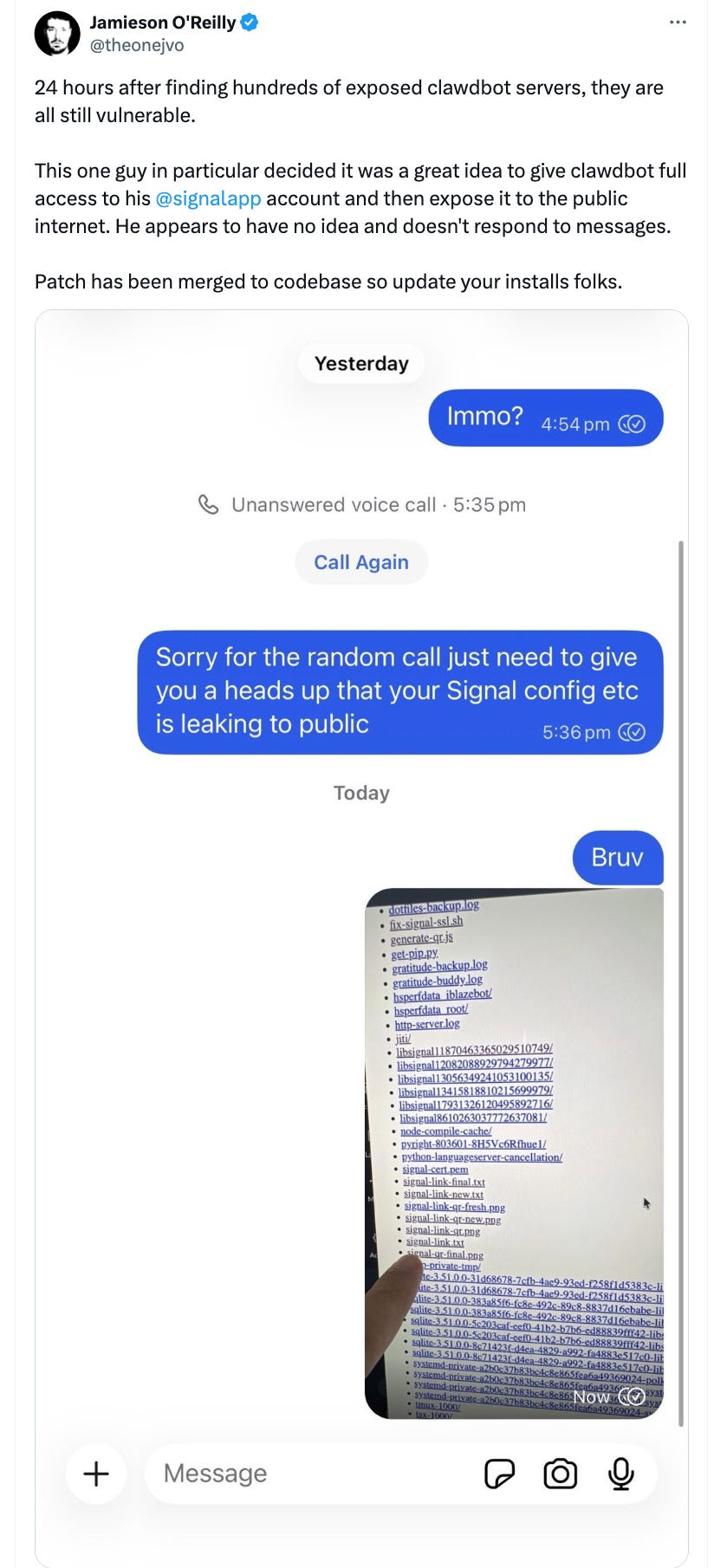

And finally, there’s a security issue.

Hundreds of Clawdbot deployments were found exposed on the internet because of a simple misconfiguration, not an exploit, giving attackers full access to credentials, private messages, and system commands.

A security researcher discovered that Clawdbot’s web admin interface was left publicly accessible without authentication in multiple cases. Attackers could view API keys, read months of private conversations (Slack, Telegram, Discord, WhatsApp), impersonate the agent’s owner, and execute commands as root.

The deeper problem: by design, AI agents need deep system access to be useful, with credentials, messaging integrations, and execution rights all centralized in one place.

Even if authentication works properly, concentrating that much power makes these systems highly attractive targets. And traditional security approaches (like least privilege, sandboxing, and separation) directly conflict with the qualities that make agents valuable.

This is less about Clawdbot specifically and more about a fundamental architectural problem with all AI agents.
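The exposure described above came from an admin interface that was reachable without authentication. Here is a minimal Python sketch of the kind of startup guard that would refuse that configuration: binding to a loopback address is allowed freely, but any other address (including `0.0.0.0`, meaning all interfaces) requires a long random token, which is then checked in constant time. The function names, the 32-character minimum, and the bearer-token scheme are assumptions for illustration, not Clawdbot’s actual settings.

```python
import hmac
import ipaddress
import secrets
from typing import Optional

MIN_TOKEN_LENGTH = 32  # ASSUMPTION: arbitrary minimum for a randomly generated token


def is_loopback(host: str) -> bool:
    """True if host only accepts connections from the local machine."""
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # unknown hostnames are treated as potentially public


def admin_config_is_safe(host: str, auth_token: Optional[str]) -> bool:
    """Refuse to expose the admin interface beyond loopback without a strong token."""
    if is_loopback(host):
        return True
    return auth_token is not None and len(auth_token) >= MIN_TOKEN_LENGTH


def request_is_authorized(header_value: Optional[str], auth_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header in constant time."""
    if not header_value or not header_value.startswith("Bearer "):
        return False
    presented = header_value[len("Bearer "):]
    return hmac.compare_digest(presented.encode(), auth_token.encode())


if __name__ == "__main__":
    token = secrets.token_urlsafe(32)  # about 43 URL-safe characters
    print(admin_config_is_safe("127.0.0.1", None))  # loopback only: allowed
    print(admin_config_is_safe("0.0.0.0", None))    # all interfaces, no auth: refused
    print(admin_config_is_safe("0.0.0.0", token))   # all interfaces, strong token: allowed
    print(request_is_authorized(f"Bearer {token}", token))
```

A guard like this only addresses the misconfiguration; it does nothing about the deeper problem of concentrating credentials and execution rights in a single process.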

So the hype on Clawdbot really comes down to timing and packaging:

It caught the AI agent hype cycle. In early 2026, the whole industry is at peak agent hype, and Clawdbot landed right on that wave.

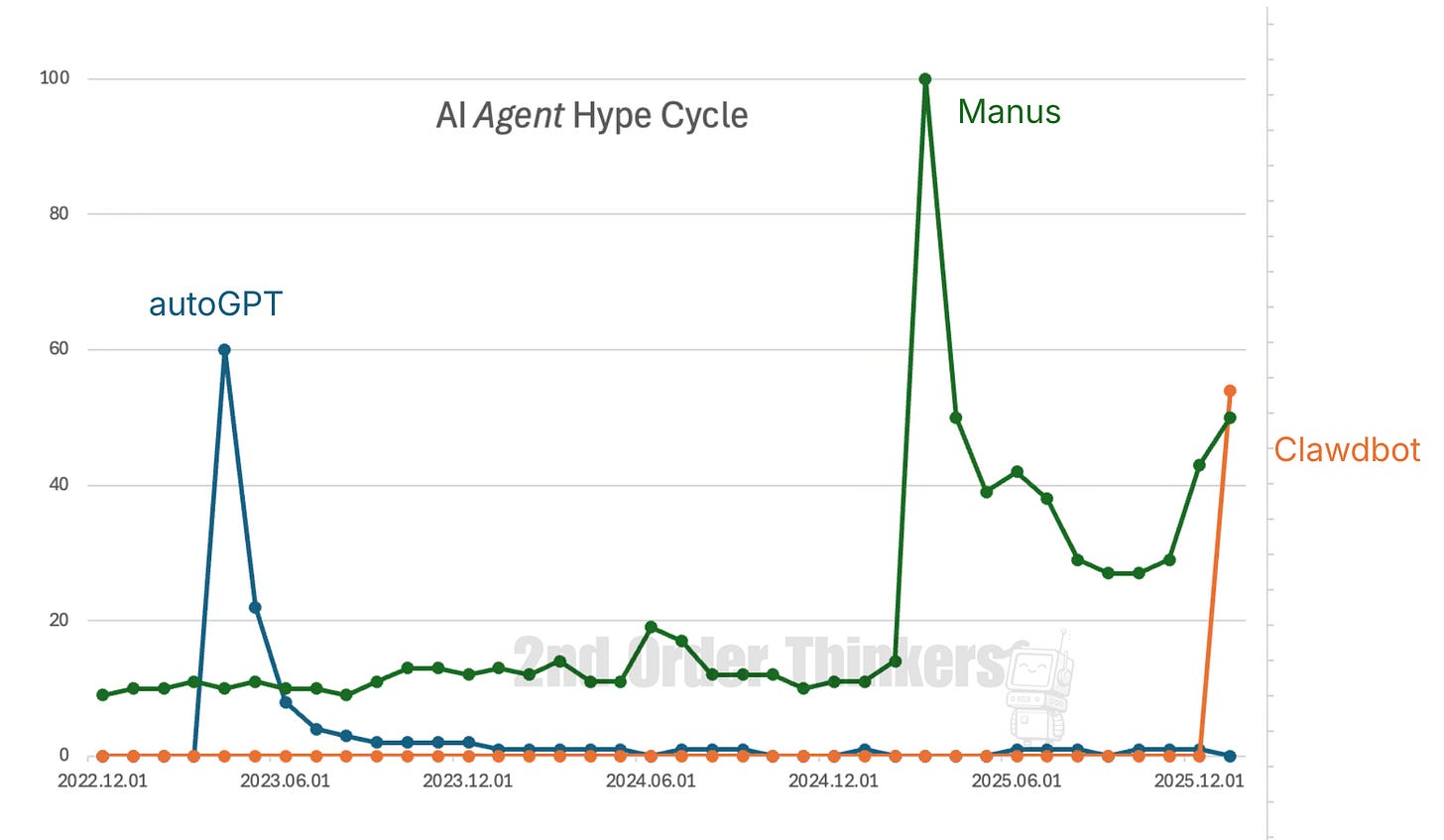

Open-source community amplification. It hit GitHub trending, got discussed on Hacker News, a few influential developers retweeted it, and it took off. But this kind of heat often fades as fast as it came. Isn’t this a lot like AutoGPT exploding in April 2023, or Manus in early 2025 (the green line in the chart; its search volume climbed again after Meta announced the acquisition)? How many people are still using those now?

The “finally something usable” illusion. Those showcase cases might be cherry-picked best-case scenarios.

Third Shift: A Conversation Made Me Rethink What “Innovation” Means

Right around then, I chatted with a friend about this.

She said: “I’m rarely impressed, but Clawdbot I’m definitely recommending. Just by chatting, I used Clawdbot to set up auto-transcription from Voice Memos to Notes. I love how efficient it is! The interface is just WhatsApp, and it can directly control my Mac mini. It’s already better than having a PA!”

I pushed back: “Isn’t it just an agent with tool calling? Why is it so hot?”

But her example made me stop and think.

Maybe the technology isn’t new, but the experience is.

LangChain can do agent + tool calling, but you have to write code, configure environments, deploy services, and write APIs.

With Clawdbot, however, it looks like this:

You on WhatsApp:

Help me auto-transcribe a Voice Memo to Notes.

Bot:

OK, let me write a skill.

[10 minutes later]

Bot:

Done, it’ll auto-transcribe from now on.

That’s not the same thing at all.

I suddenly understood: it has potentially crossed the chasm from “can do” to “will use.”

How many people actually use LangChain for daily automation? How many people actually run AutoGPT in production? Very few. Because getting from “technically possible” to “actually using it” requires enormous engineering effort and learning costs.

If Clawdbot really lowers that barrier to sending a message, the difference could be enormous.

And it proves that an “AI secretary” isn’t sci-fi.

Think about the value of a secretary who is on call 24/7 (humans can’t do this, AI can), remembers everything (humans forget, AI doesn’t), executes repetitive tasks without complaining (humans get annoyed, AI doesn’t), and handles multiple tasks simultaneously (humans can’t, AI can spin up multiple sub-agents).

On the cost side (what most of us care about most), a human secretary costs at least $1-2k per month, while Clawdbot tops out at around $200 per month in API costs.

ROI is on a completely different level.

On top of that, it’s self-extending. Instead of waiting for a developer to update traditional software, you can just use Clawdbot:

Want a new feature → Tell it → It writes its own skill → Ready to use.

This “teach it once, use it automatically forever” experience is genuinely new.
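The “teach it once, use it forever” loop can be sketched as a cache of generated skills: the first time a request arrives, something writes a skill for it; every later time, the stored skill is reused. In this minimal Python sketch, the `author` callable is a hypothetical stand-in for the model writing code, and `SkillRegistry` illustrates the pattern only; it is not Clawdbot’s actual skill format.

```python
from typing import Callable, Dict

Skill = Callable[[], str]


class SkillRegistry:
    """Create a skill the first time a task is requested, then reuse it."""

    def __init__(self, author: Callable[[str], Skill]) -> None:
        self._author = author  # hypothetical: in a real agent, an LLM writing code
        self._skills: Dict[str, Skill] = {}
        self.authored = 0      # how many skills had to be written from scratch

    def handle(self, task: str) -> str:
        if task not in self._skills:
            self._skills[task] = self._author(task)  # "teach it once"
            self.authored += 1
        return self._skills[task]()                  # "use it forever"


def toy_author(task: str) -> Skill:
    # Stand-in for the model: returns a function that just reports the task.
    return lambda: f"ran skill for: {task}"


if __name__ == "__main__":
    registry = SkillRegistry(toy_author)
    registry.handle("transcribe new voice memos")
    registry.handle("transcribe new voice memos")
    print(registry.authored)  # the skill was written only once
```

The hard part in practice is the `author` step: whether the generated skill is correct, and what permissions it runs with, which ties back to the security concerns above.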

The command line and a graphical interface can both complete the same tasks, but it was the graphical interface that made computers mainstream. Clawdbot might be that kind of usability breakthrough: the tech isn’t new, but the ease of use has undergone a qualitative change.

BUT!

Here’s the “but” behind my third flip.

The deeper question is: Do you really need a 24/7 running agent at all?

Think about it carefully.

Daily auto-briefing → sure, but do you actually read it every day? Price monitoring → sure, but how many times a month does it actually trigger? Auto-reply emails → sure, but won’t that cause problems?

Truly high-frequency, high-value automation scenarios might be far fewer than you’d think.

Probably 90% of the people who manage to install Clawdbot will soon go through this cycle: Week one, excitedly trying out all the features. Week two, occasionally remembering to use it. A month later, basically forgotten.

Just like smart home devices. I’ve seen too many people buy a bunch of smart gadgets and end up still using their hands to flip switches.

Because in real life, “saying a voice command” isn’t that much more convenient than “pressing a button.”

Second-Order Thinking Questions

Writing this, I realize I might never be able to write a “standard answer” review of Clawdbot.

Before you decide whether Clawdbot represents a breakthrough or just productivity theater, consider:

It can genuinely be a breakthrough: not a technical one, but an experience breakthrough. It proved that a “personal AI assistant” can go from sci-fi concept to daily tool. That demonstration is significant.

The “productivity theater” problem: People spend 10 hours configuring automation that saves them 20 minutes a week. But it feels productive. And it looks impressive in a demo.

It is overhyped! Most people probably don’t need a 24/7 running agent, can’t stomach the technical barriers and costs, and struggle to find sustained use cases.

The real use cases are probably: technical people automating workflows (CI/CD, monitoring, deployment), heavy productivity hackers (genuinely processing tons of repetitive tasks daily), and specific enterprise needs (like 24/7 monitoring of certain data sources, though again, Clawdbot is no different from other agents there).

And again, the “car-buying assistant” → how many times a year do you buy a car? “Turn $100 into 3x in one day by trading” → risk exceeds benefit. “Organize files” → a one-time task that doesn’t need 24/7 operation.
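The productivity-theater point above is easy to check with arithmetic: setup time divided by time saved per week gives the number of weeks before an automation pays for itself. Here is a minimal Python sketch using the text’s example of 10 hours of configuration to save 20 minutes a week. The function name is hypothetical, and it ignores ongoing maintenance and API costs, which would only push the break-even point further out.

```python
def break_even_weeks(setup_hours: float, minutes_saved_per_week: float) -> float:
    """Weeks until the time saved by an automation equals the time spent building it."""
    if minutes_saved_per_week <= 0:
        return float("inf")  # an automation that saves nothing never pays off
    return setup_hours * 60 / minutes_saved_per_week


if __name__ == "__main__":
    weeks = break_even_weeks(setup_hours=10, minutes_saved_per_week=20)
    print(f"Break-even after {weeks:.0f} weeks")
```

That works out to 30 weeks, roughly seven months of consistent use, before those 10 hours are earned back. Given the week-one, week-two, month-later pattern described earlier, many setups may never get there.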

So if you ask me, “Should I try Clawdbot?” my answer is: first, ask yourself three questions.

Do you have truly high-frequency, repetitive, automatable tasks? (Not “might have”, but actually have.)

Can you stomach the technical barriers and debugging costs? (Be honest.)

Do you genuinely need 24/7 operation? What for? (Be specific.)

If you can answer all three with high confidence and can articulate what you have in mind, then it’s definitely worth trying.

If any answer is “not sure,” then it’s probably just FOMO talking.

After all, judging whether a tool is truly useful isn’t about how many people cheer at launch; it’s about how many people are still using it six months later.

Having written this article, my biggest takeaway isn’t a better understanding of Clawdbot; it’s a rethink of what the word “innovation” means, and a better understanding of the hype cycle. (Read my earliest hype cycle article.)

Is the biggest innovation not inventing new technology, but making existing technology actually usable? And isn’t “actually usable” itself a hypothesis that needs validating?

I’m still watching. How about you?

Klaas is a coauthor of this article. He brings a unique blend of experience: he has been a CTO and has worked on multiple M&A deals (really involved from start to end), restructuring, and corporate finance.

Call him and ask, “Should I use an agent in my workflow?” You’d be surprised.
