You’ve probably seen the MIT study. Or at least you’ve heard people talking about how 95% of organizations are getting zero return on generative AI. Your LinkedIn feed is full of people sharing it with knowing nods, saying “I told you so” about the AI hype bubble.

Meanwhile, McKinsey and other consultancies are much more positive about GenAI usage in the enterprise.

Both sets of statistics are correct.

The problem is that most people (even some AI celebrities and scholars) get lost in the nuances of economic terminology or, worse, miss the entire point of how technology adoption actually works.

The MIT report measured “intensive margin” adoption: full production deployment with measurable KPI and ROI impact six months after the pilot. This is like asking, “How deeply are you using it?”

The McKinsey survey, by contrast, measured “extensive margin” adoption: whether an organization uses AI in at least one business function, regardless of scale or measurable impact. Think of this as asking, “Are you using it at all, anywhere?”
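To see how both headline numbers can describe the same population, here is a toy calculation in Python. The firm counts are entirely hypothetical (not taken from either report), and the `Firm` record and the two helper functions are illustrative names made up for this sketch. It assumes 100 firms, 80 of which use GenAI somewhere and only 4 of which have a deployment in production with measured ROI; the intensive margin is computed among adopters only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Firm:
    """Hypothetical survey record for one organization (illustrative only)."""
    uses_genai_somewhere: bool    # extensive margin: any use in at least one function
    in_production_with_roi: bool  # intensive margin: deployed, with measured KPI/ROI impact


def extensive_margin(firms: list[Firm]) -> float:
    """Share of all firms using GenAI anywhere ("Are you using it at all?")."""
    return sum(f.uses_genai_somewhere for f in firms) / len(firms)


def intensive_margin(firms: list[Firm]) -> float:
    """Among firms that use GenAI at all, the share whose use reached
    production with measured ROI ("How deeply are you using it?")."""
    adopters = [f for f in firms if f.uses_genai_somewhere]
    if not adopters:
        return 0.0  # no adopters means no depth of adoption to measure
    return sum(f.in_production_with_roi for f in adopters) / len(adopters)


# Made-up population of 100 firms, chosen only to illustrate the arithmetic.
firms = (
    [Firm(True, True)] * 4        # adopters with production deployment and measured ROI
    + [Firm(True, False)] * 76    # adopters stuck in pilots
    + [Firm(False, False)] * 20   # non-adopters
)

print(f"extensive margin: {extensive_margin(firms):.0%}")  # extensive margin: 80%
print(f"intensive margin: {intensive_margin(firms):.0%}")  # intensive margin: 5%
```

With these toy numbers, “80% of companies use GenAI” and “95% of adopters see no measurable return” are two readings of exactly the same data.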

These aren’t contradictory findings.

They’re documenting different phases of the same inevitable process that has played out with every transformational technology for the last 40 years.

What actually matters is that while you’re debating success rates, marginal economic gains are pushing everyone toward adoption, whether they want it or not.

You need to stop reading the 95% failure rate as a warning about AI.

It actually confirms that AI is following the same adoption pattern as personal computers and the internet.

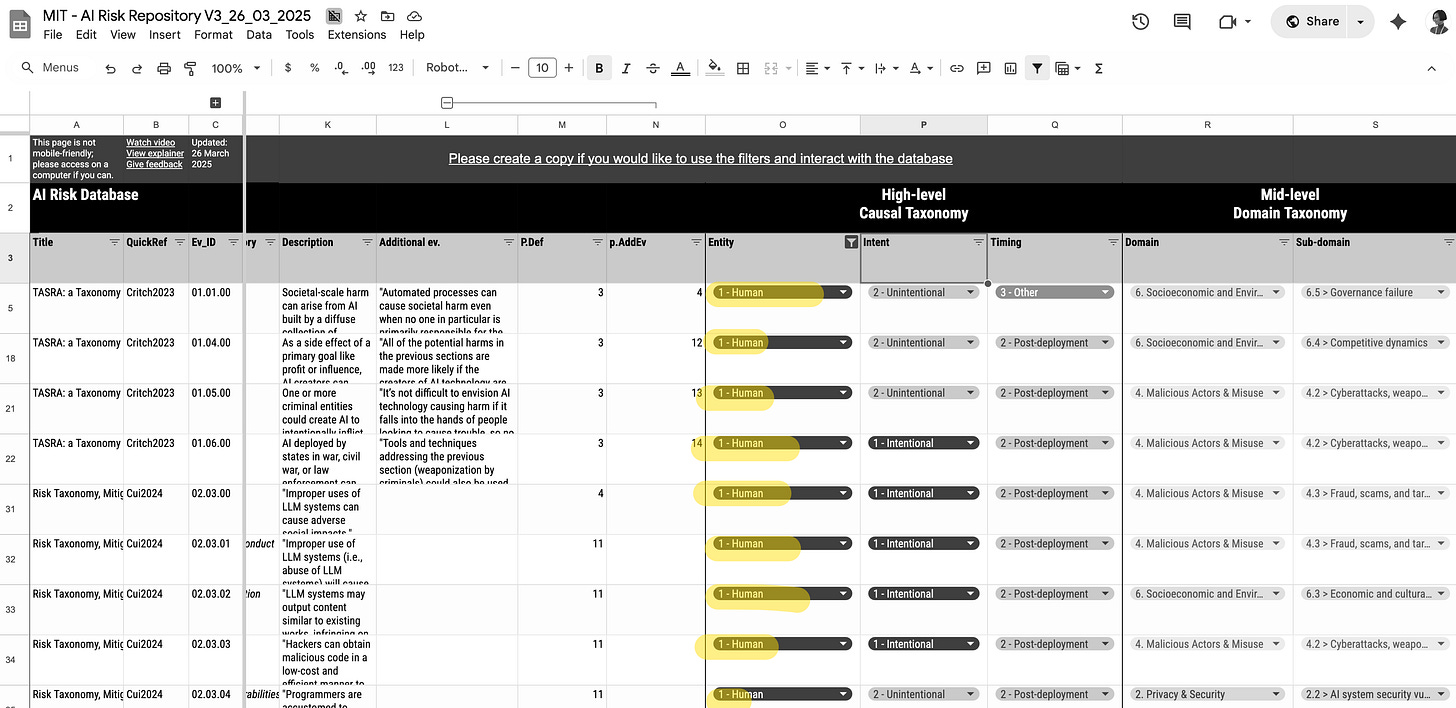

I’ve been emphasizing the harms and risks of adopting AI, knowing that more than 24.1% of risks stem from human mistakes, intentional or not. Still, all of us need to adopt AI one way or another, and I’ll explain why below.

TL;DR

Q: What did the MIT study really find about AI projects?

The MIT “GenAI Divide” study found that around 95% of enterprise generative AI pilot projects fail to deliver measurable business results or progress beyond early stages, despite billions invested. Most failures happen because organizations get stuck in endless pilots, struggle with integration, or focus on flashy experiments over deep process change.

Q: Is AI adoption still racing ahead despite these failures?

Yes. Companies and individuals keep embracing AI tools to capture even small productivity gains, while most major projects stall. The pace of adoption is relentless because marginal benefits, competitive pressure, and network effects drive widespread use, regardless of overall project success rates.

Q: Where are we now in the history of AI adoption compared to other technologies?

AI is in the pilot phase, like PCs and the internet during their early decades, with high failure rates and immature best practices. These failures are typical for transformative technologies.

Q: Does everyone have a choice about adopting AI?

No. The landscape is shaped by millions of individual and organizational decisions, creating irreversible competitive dynamics. Even if everyone sees the risks, holding back isn’t viable. The only move is to experiment early, position for future change, and learn from current mistakes.

Shall we?