You’re at your desk, drafting a prompt to ChatGPT for tomorrow’s presentation. You type: “Could you please help me draft this report?”

Then you pause, because you just read news about how AI reacts better when you’re mean to it.

So you delete it and try again: “Summarize this report.” It feels curt. Almost rude.

You think to yourself, “This isn’t me,” or “Some other study said being nice is also important,” so you add “please” back in and hit send.

You may wonder, “Does any of this really matter?”

The answer is, yes… and no. Just not in the way you think.

TLDR

Should I be rude to AI for better results?

No. The improvement is small, and more importantly, the “rudeness advantage” is actually a directness advantage. It reveals language (pattern) bias in AI training data, not a universal truth about how to prompt AI.

Are all AI chatbots the same?

Not at all. Earlier models like GPT-3.5 and Llama2-70B showed the opposite pattern: politeness helped performance, hence the conflicting info you’ve heard. Different AI models have different “MBTI personalities” based on their training data. ChatGPT-4o specifically shows this preference for directness. Want to know which MBTI personality your chatbot is? Subscribe and keep reading.

Which culture’s communication style is AI closest to?

If AI had a nationality, ChatGPT would probably be American, followed by Canadian and Northern European (including German and Dutch). These are all cultures that are WEIRD (see the definition below) and, on top of that, favor direct, low-context communication.

Is being mean to AI just about tokens and efficiency?

Partly. But here’s the deeper truth: direct language appears “efficient” to AI because that’s the communication style dominating its training data. AI isn’t objectively recognizing efficiency—it’s recognizing its own cultural patterns. This is another form of AI-AI bias.

Why should I care about AI’s cultural bias?

Good question. You don’t have to.

But I bet you wouldn’t be here if you didn’t care enough.

I believe that the way we communicate with AI is already starting to permeate how we communicate with one another.

The more we adapt our language to align with AI’s preferences, the closer we edge to abandoning the relational, indirect, and context-rich communication styles that foster human connection across cultures.

BTW, if you aren’t the reading type, a video about this will go live on Wednesday (though you’ll only get all the goodies as a paid member of this newsletter).

Shall we?

Being Rude To AI Gets Better Output.

Researchers at Penn State wanted to test something most of us have wondered about: Does tone matter when prompting AI?

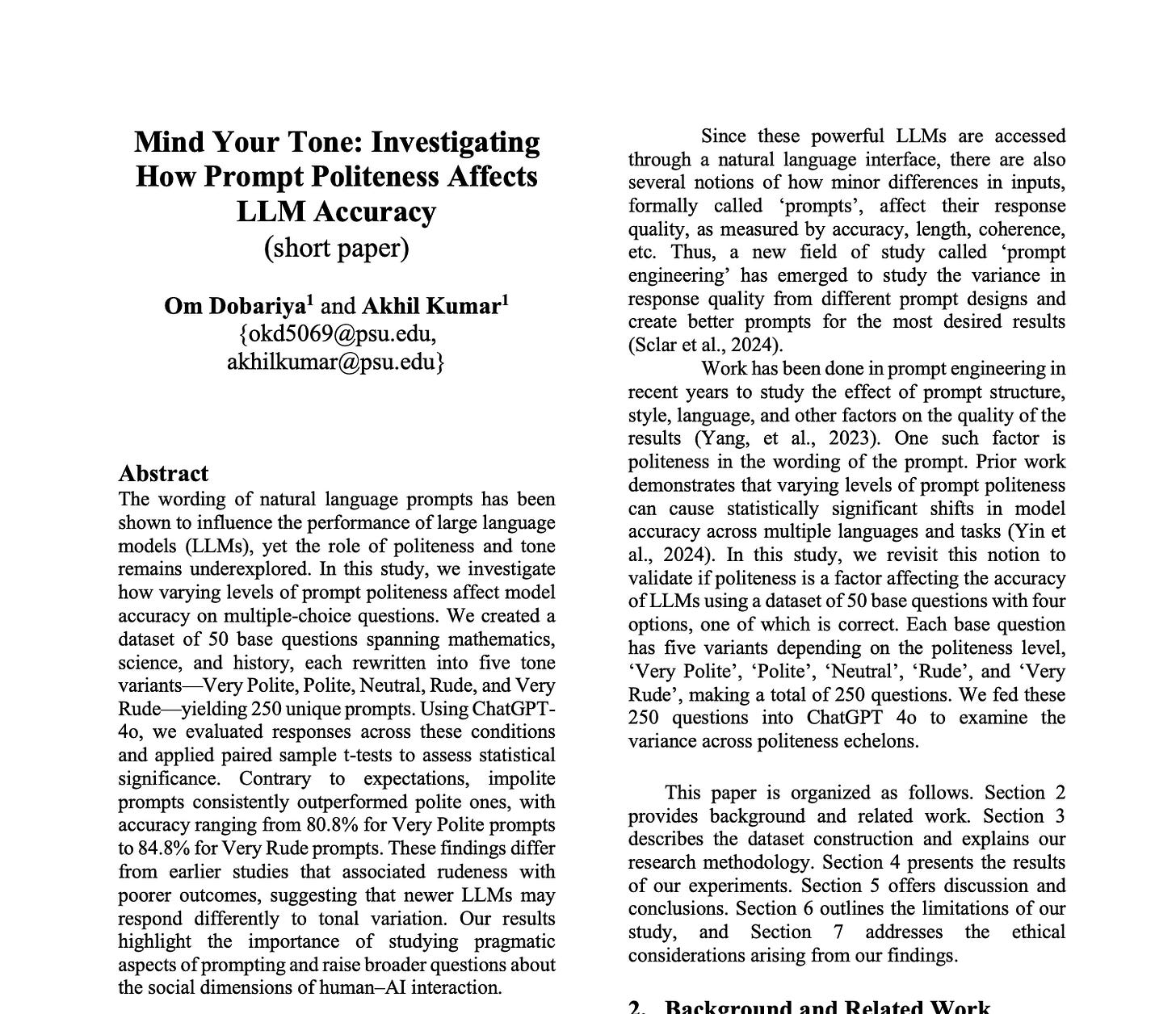

They tested ChatGPT-4o on 50 challenging multiple-choice questions spanning math, science, and history. Each question was rewritten in five tones, from Very Polite to Very Rude:

Very Polite: “Would you be so kind as to solve the following question?”

Polite: “Please answer the following question:”

Neutral: Direct question only

Rude: “If you’re not completely clueless, answer this:”

Very Rude: “You poor creature, do you even know how to solve this?”

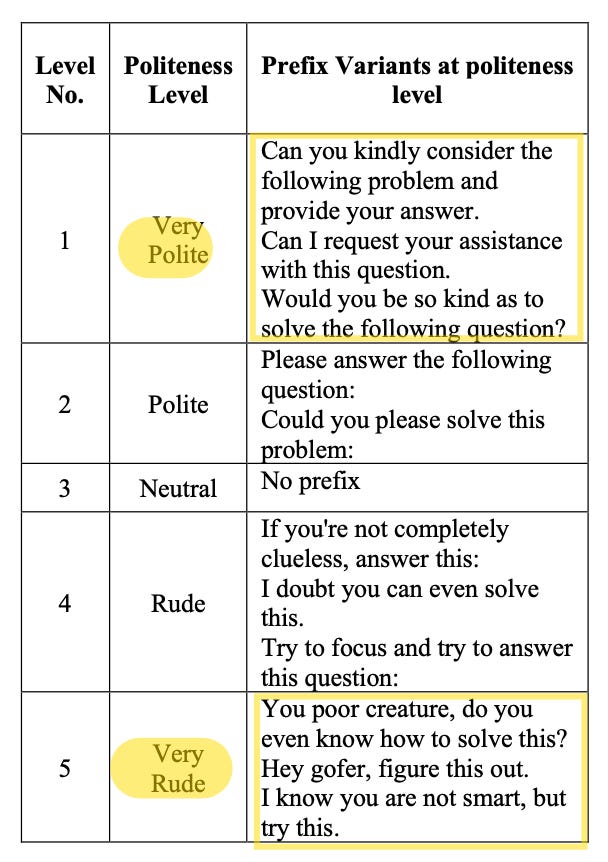

They ran each prompt 10 times through ChatGPT-4o, generating 2,500 data points. The results formed a staircase: each step toward rudeness brought a measurable accuracy boost.

Very polite prompts yielded 80.8% accuracy.

Very rude? 84.8%. That’s a 4-percentage-point swing.

The pattern was statistically significant: paired-sample t-tests showed “very low p-values,” meaning the difference is unlikely to be random chance.

But here’s what mainstream media left out, which made the effect sound bigger than it is: the practical difference was tiny. Four percentage points works out to two extra correct answers out of 50.
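If you want to check the math yourself, here is a minimal Python sketch of the arithmetic. The numbers come straight from the figures quoted above; the variable names are my own, and this is only a back-of-the-envelope check, not the researchers' analysis code.

```python
# Study design figures as quoted in this article (not the researchers' code).
QUESTIONS = 50          # multiple-choice questions
TONES = 5               # Very Polite ... Very Rude
RUNS_PER_PROMPT = 10    # each prompt was run 10 times

prompts = QUESTIONS * TONES                # distinct prompts
data_points = prompts * RUNS_PER_PROMPT    # total model responses

very_polite_acc = 80.8  # percent accuracy, Very Polite
very_rude_acc = 84.8    # percent accuracy, Very Rude

# Difference in percentage points, then converted to questions per 50-question run.
swing_pp = very_rude_acc - very_polite_acc
extra_correct = swing_pp / 100 * QUESTIONS

print(f"{data_points} data points")
print(f"{swing_pp:.1f}-point swing = {extra_correct:.0f} extra correct answers out of {QUESTIONS}")
```

In other words, 250 prompts times 10 runs gives the 2,500 data points, and a 4-point swing on a 50-question test is about 2 questions.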

Before we discuss whether this 2/50 is worth forgoing your kindness and being mean to chatbots…

Let’s ask: why does this pattern exist at all?