It’s 2035.

You wake up to an AI assistant that has already curated your news, is attending meetings as your avatar, and has lined up all your favorite snacks and entertainment. Life feels effortless, maybe a little too effortless.

By then, AI will be woven into our lives in a way more impactful than electricity, one that doesn’t just change our lifestyle but reshapes who we are.

“Being Human in 2035” comes from the team at Elon University, Janna Anderson and Lee Rainie, who’ve spent two decades obsessing over how technology reshapes humanity itself. If anyone’s qualified to dive into these questions, it’s them.

For this report, they gathered 301 thought leaders from across 14+ industries, handpicking voices from government, tech, media, and beyond.

As the authors put it in their opening: An overwhelming majority of those who wrote essays focused their remarks on the potential problems they foresee. While they said the use of AI will be a boon to society in many important – and even vital – regards, most are worried about what they consider to be the fragile future of some foundational and unique traits.

According to 61% of the respondents, the change will be deep and meaningful or fundamental and revolutionary.

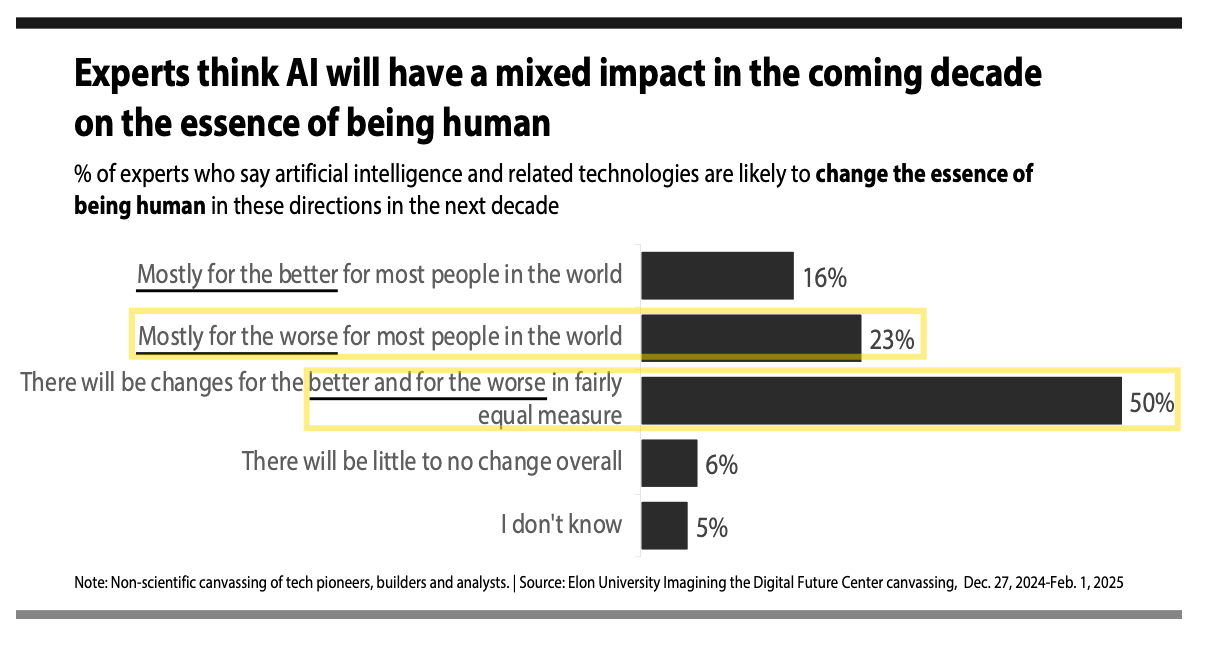

The report found that half of the 300+ experts believe AI’s effect on the “essence” of being human may carry a drawback for every benefit. About 23% mostly foresee a net negative impact.

Only 16% are mostly optimistic that AI will change humanity for the better.

The experts were then asked how living with AI will change 15 core traits of who we are (how we think, feel, and act) by 2035, compared with the world before advanced AI.

The questions included:

How does AI shift our ability to relate, empathize, and build social bonds?

How does AI change our ability to trust information, others, and shared norms?

How does AI affect our thinking, learning, and cognitive independence?

How does AI affect our autonomy, motivation, and sense of meaning?

I broke their insights into three big buckets: mind, brain, and purpose.

Last week, our focus was on how human emotions and connections might shift in 2035. If you missed it, read Part 1, “Alone, Together.”

This is Part 2, Brains on Autopilot and Where Do You Stand in the AI Hierarchy?

Your Brain on Autopilot

AI sidekicks that handle everything from drafting your emails to planning your entertainment and meals may boost your productivity in some ways.

Why bother thinking when you don’t need to lift a finger or look anything up for yourself? Your digital assistant will just ping someone else’s AI, and the work gets done faster and, frankly, better than you could manage.

Try to beat it and you’ll probably just end up bruising your ego.

Until you have a sudden wake-up call and realize you haven’t done any serious thinking in months…

The convenience trap we’re falling into has been set slowly.

This report highlights five distinct traits of the human brain that are susceptible to AI influence: deep thinking, reflection, decision-making, confidence, and curiosity.

Rather than seeing these traits as isolated, think of them as steps on a spiral staircase, each one lifting you higher. Deep thinking sparks reflection, which shapes your decisions, fuels your confidence, and keeps your curiosity burning. But like any staircase, it works both ways: take the wrong turn, and you can just as easily spiral down.

These steps don’t stand alone when you start using AI. The way AI nudges your thinking can either turn this spiral into a launchpad… or a slide.

Of course, everyone wants to take the right turn, and no one wants their brain to deteriorate.

So, at the end of this article, I will get to the real question: how do you avoid the downward spiral?

Shall we?